Learn how Azure AI Speech can help you create engaging and accessible applications with speech capabilities.

Azure AI Speech is a cloud-based service that offers a range of speech-related features, such as speech recognition, speech synthesis, speech translation, and speaker identification. With Azure AI Speech, you can easily add speech functionality to your applications, websites, and devices, and enable natural and intuitive interactions with your users.

What can you do with Azure AI Speech?

Azure AI Speech provides a variety of speech services that can be used for different purposes and scenarios. Here are some examples of what you can do with Azure AI Speech:

- Speech recognition: You can convert spoken audio into text, and analyze the content and intent of the speech. You can use speech recognition to enable voice commands, voice search, dictation, transcription, and more.

- Speech synthesis: You can convert text into natural-sounding speech, and customize the voice, language, and style of the speech. You can use speech synthesis to create audio books, podcasts, voice assistants, chatbots, and more.

- Speech translation: You can translate speech from one language to another, and enable real-time or offline communication across languages. You can use speech translation to create multilingual applications, websites, and devices, and facilitate cross-cultural interactions.

- Speaker identification: You can identify and verify the identity of a speaker based on their voice characteristics. You can use speaker identification to create biometric authentication, personalized experiences, and voice analytics.

Why should you use Azure AI Speech?

Azure AI Speech offers many advantages and benefits for developers and users who want to leverage speech capabilities in their applications. Here are some reasons why you should use Azure AI Speech:

- It is easy to use: You can integrate Azure AI Speech into your applications with simple and flexible APIs, SDKs, and tools. You can also use pre-built models or customize your own models with minimal coding and training.

- It is scalable and reliable: You can use Azure AI Speech to process large volumes of speech data with high performance and availability. You can also use Azure AI Speech to handle different types of speech scenarios and environments, such as noisy or low-quality audio, multiple speakers, or different accents and dialects.

- It is secure and compliant: You can use Azure AI Speech with confidence, as it follows the highest standards of security and privacy. You can also use Azure AI Speech to comply with various regulations and policies, such as GDPR, HIPAA, and SOC.

- It is affordable and cost-effective: You can use Azure AI Speech with flexible and transparent pricing plans, and only pay for what you use. You can also use Azure AI Speech to reduce the costs and complexity of developing and maintaining speech applications.

Azure AI Speech is a powerful and versatile tool that can help you create engaging and accessible applications with speech capabilities. To learn more about Azure AI Speech and how to get started, see the official Azure AI Speech documentation.

Now that we know what Azure AI Speech is, what you can do with it, and why you should use it, let’s see how easily we can use the APIs and SDKs to build a speech-enabled app.

Provision an Azure resource for speech

Before you can use Azure AI Speech, you need to create an Azure AI Speech resource in your Azure subscription. You can use either a dedicated Azure AI Speech resource or a multi-service Azure AI Services resource. To create one in the Azure portal, add a new resource to a resource group, search for ‘Speech’, and choose the Microsoft Speech service. Select the Azure region in which the service should run, enter a name, and choose a pricing tier (Free or Standard). Pricing details are listed on the Azure AI Speech pricing page.

After you have created your resource, you need the following information to use it from a client application through one of the supported SDKs:

- The location in which the resource is deployed (for example, westeurope)

- One of the keys assigned to your resource.

You can view both of these values on the Keys and Endpoint page for your resource in the Azure portal.
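A minimal sketch of loading these two values on the server, assuming they are stored in environment variables named SPEECH_KEY and SPEECH_REGION (the names used by the token endpoint later in this post). The helper name `loadSpeechSettings` is hypothetical and not part of any SDK.

```javascript
// Hypothetical helper: reads the Speech key and region from an env-like object.
function loadSpeechSettings(env = process.env) {
  const key = (env.SPEECH_KEY || '').trim();
  const region = (env.SPEECH_REGION || '').trim().toLowerCase();

  if (!key) {
    throw new Error('SPEECH_KEY is not set.');
  }
  // Region identifiers such as "westeurope" contain only lowercase letters and digits.
  if (!/^[a-z0-9]+$/.test(region)) {
    throw new Error('SPEECH_REGION must be a region identifier such as "westeurope".');
  }
  return { key, region };
}
```

Failing early here keeps a display name such as "West Europe" or a missing key from surfacing later as a less obvious authorization error.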

Using the Azure AI Speech SDK

Before we can start using the different APIs in the SDK, we need to request a token from our Azure AI Speech resource using our key and region. This is done by calling the issueToken method of the Azure AI Speech endpoint. The endpoint URL is built from the region in which your resource is provisioned. Because the key to the resource should never be exposed, the token should always be issued server side. So if we use the JavaScript SDK from a browser, the token must be requested through a server component. In my case, I use an Express server on Node.js for this.

An example of issuing a token from JavaScript could look like this:

    const express = require('express');
    const axios = require('axios');
    // Assumes the dotenv package is used to load SPEECH_KEY and SPEECH_REGION from the .env file.
    require('dotenv').config();

    const app = express();

    // Exchanges the resource key for a short-lived token so the key never reaches the browser.
    app.get('/api/get-speech-token', async (req, res, next) => {
        res.setHeader('Content-Type', 'application/json');
        const speechKey = process.env.SPEECH_KEY;
        const speechRegion = process.env.SPEECH_REGION;

        // Reject both unset values and the untouched placeholders.
        if (!speechKey || !speechRegion || speechKey === 'paste-your-speech-key-here' || speechRegion === 'paste-your-speech-region-here') {
            res.status(400).send('You forgot to add your speech key or region to the .env file.');
        } else {
            const headers = {
                headers: {
                    'Ocp-Apim-Subscription-Key': speechKey,
                    'Content-Type': 'application/x-www-form-urlencoded'
                }
            };

            try {
                const tokenResponse = await axios.post(`https://${speechRegion}.api.cognitive.microsoft.com/sts/v1.0/issueToken`, null, headers);
                res.send({ token: tokenResponse.data, region: speechRegion });
            } catch (err) {
                res.status(401).send('There was an error authorizing your speech key.');
            }
        }
    });
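The same token request can be sketched without Express or axios. This version assumes Node.js 18 or later, where `fetch` is built in. The helper names `buildIssueTokenUrl` and `issueToken` are hypothetical, and the fetch implementation is passed in as a parameter so the function can be exercised without a network call.

```javascript
// Hypothetical helper: builds the regional token endpoint used in the Express route above.
function buildIssueTokenUrl(region) {
  return `https://${region}.api.cognitive.microsoft.com/sts/v1.0/issueToken`;
}

// Exchanges the resource key for a short-lived token. Call this on the server only.
async function issueToken(key, region, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(buildIssueTokenUrl(region), {
    method: 'POST',
    headers: { 'Ocp-Apim-Subscription-Key': key },
  });
  if (!response.ok) {
    throw new Error(`Token request failed with status ${response.status}.`);
  }
  // The token comes back as plain text in the response body.
  return response.text();
}
```

On a server this could be called as `issueToken(process.env.SPEECH_KEY, process.env.SPEECH_REGION)`, with the result returned to the browser together with the region.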

While the specific details vary depending on the SDK being used (Python, C#, and so on), there’s a consistent pattern for using the Speech to text API:

- Use a SpeechConfig object to encapsulate the information required to connect to your Azure AI Speech resource, specifically its location and key.

- Optionally, use an AudioConfig to define the input source for the audio to be transcribed. By default, this is the default system microphone, but you can also specify an audio file.

- Use the SpeechConfig and AudioConfig to create a SpeechRecognizer object. This object is a proxy client for the Speech to text API.

- Use the methods of the SpeechRecognizer object to call the underlying API functions. For example, the RecognizeOnceAsync() method uses the Azure AI Speech service to asynchronously transcribe a single spoken utterance.

- Process the response from the Azure AI Speech service. In the case of the RecognizeOnceAsync() method, the result is a SpeechRecognitionResult object that includes the following properties:

  - Duration
  - OffsetInTicks
  - Properties
  - Reason
  - ResultId
  - Text

If the operation was successful, the Reason property has the enumerated value RecognizedSpeech, and the Text property contains the transcription. Other possible values for Reason include NoMatch (the audio was successfully parsed but no speech was recognized) and Canceled (an error occurred, in which case you can check the Properties collection for the CancellationReason property to determine what went wrong).
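The result handling described above can be sketched in JavaScript as follows. The helper name `describeRecognitionResult` is hypothetical. It takes the imported SDK module as its first argument (called `speechsdk` elsewhere in this post) and relies on the JavaScript SDK's `ResultReason` enum and `CancellationDetails.fromResult`. Note that the JavaScript SDK uses camelCase property names such as `reason` and `text`.

```javascript
// Hypothetical helper: turns a recognition result into an { ok, message } pair.
// `sdk` is the imported Speech SDK module.
function describeRecognitionResult(sdk, result) {
  switch (result.reason) {
    case sdk.ResultReason.RecognizedSpeech:
      return { ok: true, message: result.text };
    case sdk.ResultReason.NoMatch:
      return { ok: false, message: 'The audio was processed, but no speech was recognized.' };
    case sdk.ResultReason.Canceled: {
      // CancellationDetails exposes why the operation was canceled.
      const details = sdk.CancellationDetails.fromResult(result);
      return { ok: false, message: `Recognition canceled: ${details.errorDetails}` };
    }
    default:
      return { ok: false, message: `Unexpected result reason: ${result.reason}` };
  }
}
```

A single-utterance call could then hand its result to this helper, for example inside the success callback of `recognizeOnceAsync`.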

From a code perspective this could look something like this for a JavaScript frontend:

    const speechToTextFromMic = useCallback(async () => {
        const tokenObj = await getTokenOrRefresh();
        const speechConfig = speechsdk.SpeechConfig.fromAuthorizationToken(tokenObj.authToken, tokenObj.region);
        speechConfig.speechRecognitionLanguage = speakingLanguage;

        const audioConfig = speechsdk.AudioConfig.fromDefaultMicrophoneInput();
        const _recognizer = new speechsdk.SpeechRecognizer(speechConfig, audioConfig);

        setDisplayText('');

        // Set up the recognized event to start capturing the recognized text.
        _recognizer.recognized = onRecognized;

        // Start the continuous recognition/translation operation.
        _recognizer.startContinuousRecognitionAsync();

        // Set recognizer value to state so we can stop it later
        setRecognizer(_recognizer);

        // Set translating to true so we can show the user we are translating
        setTranslating(true);
    }, [speakingLanguage, onRecognized]);

Notice that we first get our token from our backend (getTokenOrRefresh). Then we set up our SpeechConfig. You have two options here: fromAuthorizationToken or fromSubscription. Because we are calling the Speech API from a browser through JavaScript, we retrieve our token through a server-side API so we don’t expose our key. If you run the code above on a server, you could instead use the fromSubscription method with the subscription key and region of your Azure AI Speech resource.
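The getTokenOrRefresh function itself is not shown in this post. A minimal sketch of one way to write it, assuming an in-memory cache: Speech access tokens are valid for 10 minutes, so the sketch reuses a token for 9 minutes before requesting a new one. The factory name `createTokenProvider` and its parameters are hypothetical.

```javascript
// Hypothetical factory: returns a getTokenOrRefresh function that caches the token in memory.
// `fetchToken` must resolve to { token, region }, the shape the Express endpoint above sends.
function createTokenProvider(fetchToken, maxAgeMs = 9 * 60 * 1000, now = Date.now) {
  let cached = null;
  let expiresAt = 0;

  return async function getTokenOrRefresh() {
    if (cached && now() < expiresAt) {
      return cached;
    }
    const { token, region } = await fetchToken();
    // Rename `token` to `authToken`, the property the snippets in this post read.
    cached = { authToken: token, region };
    expiresAt = now() + maxAgeMs;
    return cached;
  };
}
```

In the browser this could be wired up as `const getTokenOrRefresh = createTokenProvider(() => fetch('/api/get-speech-token').then(r => r.json()));`.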

Going the other way around, converting text to speech, follows much the same process with a speechConfig and an audioConfig, but instead of the SpeechRecognizer object you use the SpeechSynthesizer object. In addition to the speechConfig and audioConfig, you also define a SpeakerAudioDestination object, which plays the text back as speech. In the audioConfig, we specify the speaker as the audio output (the default speaker of the browser). On the synthesizer object, you call the speakTextAsync method, passing the text to speak and a callback that receives the result. In that callback you can check whether the synthesis completed or was canceled, run any follow-up logic, and then close the synthesizer. Because the audioConfig is created with fromSpeakerOutput, the text passed to speakTextAsync is played through your speakers automatically. From a code perspective this could look something like this for a JavaScript frontend:

    const textToSpeech = useCallback(async () => {
        const tokenObj = await getTokenOrRefresh();
        const speechConfig = speechsdk.SpeechConfig.fromAuthorizationToken(tokenObj.authToken, tokenObj.region);
        speechConfig.speechSynthesisVoiceName = translationLanguage;
        //speechConfig.setProperty(speechsdk.PropertyId.SpeechServiceConnection_TranslationVoice, translationLanguage);

        const myPlayer = new speechsdk.SpeakerAudioDestination();
        // Store the player in state.
        updatePlayer(p => { p.p = myPlayer; return p; });
        // Use the local reference: the state update above may not have been applied yet.
        const audioConfig = speechsdk.AudioConfig.fromSpeakerOutput(myPlayer);

        let synthesizer = new speechsdk.SpeechSynthesizer(speechConfig, audioConfig);
        synthesizer.speakTextAsync(
            translatedText,
            result => {
                //let text;
                if (result.reason === speechsdk.ResultReason.SynthesizingAudioCompleted) {
                    //text = `synthesis finished for "${textToSpeak}".\n`
                } else if (result.reason === speechsdk.ResultReason.Canceled) {
                    //text = `synthesis failed. Error detail: ${result.errorDetails}.\n`
                }
                synthesizer.close();
                synthesizer = undefined;
                //setDisplayText(text);
            },
            function (err) {
                setDisplayText(`Error: ${err}.\n`);
                synthesizer.close();
                synthesizer = undefined;
            });
    }, [translatedText, translationLanguage]);
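If you prefer async/await over callbacks, the speakTextAsync call can be wrapped in a Promise. This is a sketch rather than part of the SDK: the helper name `speakText` is hypothetical, and it takes the SDK module and an already constructed synthesizer as arguments.

```javascript
// Hypothetical helper: wraps the callback-based speakTextAsync call in a Promise
// and closes the synthesizer on both the success and the error path.
function speakText(sdk, synthesizer, text) {
  return new Promise((resolve, reject) => {
    synthesizer.speakTextAsync(
      text,
      (result) => {
        synthesizer.close();
        if (result.reason === sdk.ResultReason.SynthesizingAudioCompleted) {
          resolve(result);
        } else {
          reject(new Error(`Synthesis did not complete: ${result.errorDetails}`));
        }
      },
      (err) => {
        synthesizer.close();
        reject(new Error(String(err)));
      }
    );
  });
}
```

With this wrapper, the component code reduces to `await speakText(speechsdk, synthesizer, translatedText)` inside a try/catch block.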

Translations using the Azure AI Speech SDK

Now that we can speak into our microphone, get the text as a string, and convert it back to speech again, we can build some really cool scenarios. And if we also translate the spoken text into a different language and turn that translated text back into speech, we have a real-time translator app!

Again, from a code perspective the process is much the same, using a speechConfig (here a SpeechTranslationConfig) and an audioConfig. For translation we use the TranslationRecognizer object from the SDK. With this recognizer we call the startContinuousRecognitionAsync method to start the translation process. Because the audioConfig is created with fromDefaultMicrophoneInput, you can start talking into your microphone and the translation events start firing. The recognizer object has a recognized callback to which you assign your own function, which is responsible for doing something with the recognized translations. From a code perspective this could look something like this for a JavaScript frontend:

    const doContinuousTranslation = useCallback(async () => {
        const tokenObj = await getTokenOrRefresh();
        const speechConfig = speechsdk.SpeechTranslationConfig.fromAuthorizationToken(tokenObj.authToken, tokenObj.region);
        speechConfig.speechRecognitionLanguage = speakingLanguage;
        speechConfig.addTargetLanguage(translationLanguage.substring(0, 5));

        const audioConfig = speechsdk.AudioConfig.fromDefaultMicrophoneInput();

        // Create the TranslationRecognizer and set up common event handlers.
        const _recognizer = new speechsdk.TranslationRecognizer(speechConfig, audioConfig);

        // Set up the recognized event to start capturing the recognized text.
        _recognizer.recognized = onRecognized;

        // Reset the display text
        setDisplayText('');

        // Reset the translation text
        setTranslatedText('');

        // Start the continuous recognition/translation operation.
        _recognizer.startContinuousRecognitionAsync();

        // Set recognizer value to state so we can stop it later
        setRecognizer(_recognizer);

        // Set translating to true so we can show the user we are translating
        setTranslating(true);
    }, [speakingLanguage, translationLanguage, onRecognized]);
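The call `translationLanguage.substring(0, 5)` presumably works because translationLanguage holds a voice name such as `de-DE-KatjaNeural`, whose first five characters are the locale. That is an assumption about the surrounding app, not something the SDK requires. A slightly more robust sketch splits on the hyphens instead, so that voice names starting with a three-letter language code (for example `fil-PH`) are not cut off. The helper name `localeFromVoiceName` is hypothetical.

```javascript
// Hypothetical helper: extracts the locale ("de-DE") from a voice name ("de-DE-KatjaNeural").
function localeFromVoiceName(voiceName) {
  const parts = voiceName.split('-');
  if (parts.length < 3) {
    throw new Error(`"${voiceName}" does not look like a <language>-<REGION>-<Voice> name.`);
  }
  return `${parts[0]}-${parts[1]}`;
}
```

The target language could then be added with `speechConfig.addTargetLanguage(localeFromVoiceName(translationLanguage))`.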

The onRecognized callback function could look like this:

    const onRecognized = useCallback((sender, recognitionEventArgs) => {
        onRecognizedResult(recognitionEventArgs.result);
    }, [onRecognizedResult]);

And the onRecognizedResult like this:

    const onRecognizedResult = useCallback((result) => {
        if (result.text && result.text !== ".") {
            setDisplayText(prevState => { return `${prevState}${result.text}\r\n` });
        }

        if (result.translations) {
            const resultJson = JSON.parse(result.json);
            resultJson['privTranslationPhrase']['Translation']['Translations'].forEach(
                function (translation) {
                    setTranslatedText(prevState => { return `${prevState}${translation.Text}\r\n` });
                });
        }
    }, []);

Here we pass the result from the recognition event to our own function, which stores the text in state. The result contains both the spoken text (result.text) and the translated text. The translated text is parsed from the result’s json property, which holds an array of translations inside the privTranslationPhrase object; each translation is appended to state as well.
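Reading privTranslationPhrase means depending on a private field of the result JSON. As an alternative sketch, the same text should be available through the result's public `translations` object, which in the JavaScript SDK exposes a `languages` list and a `get(language)` method. The helper name `collectTranslations` is hypothetical, and it is worth checking the property names against the SDK version you use.

```javascript
// Hypothetical helper: collects the translated text for every target language
// from a translation result without touching private JSON fields.
function collectTranslations(result) {
  const output = {};
  if (!result.translations) {
    return output;
  }
  for (const language of result.translations.languages) {
    output[language] = result.translations.get(language);
  }
  return output;
}
```

Inside onRecognizedResult, the values of the returned object could be appended to the translated text state instead of the entries parsed from result.json.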

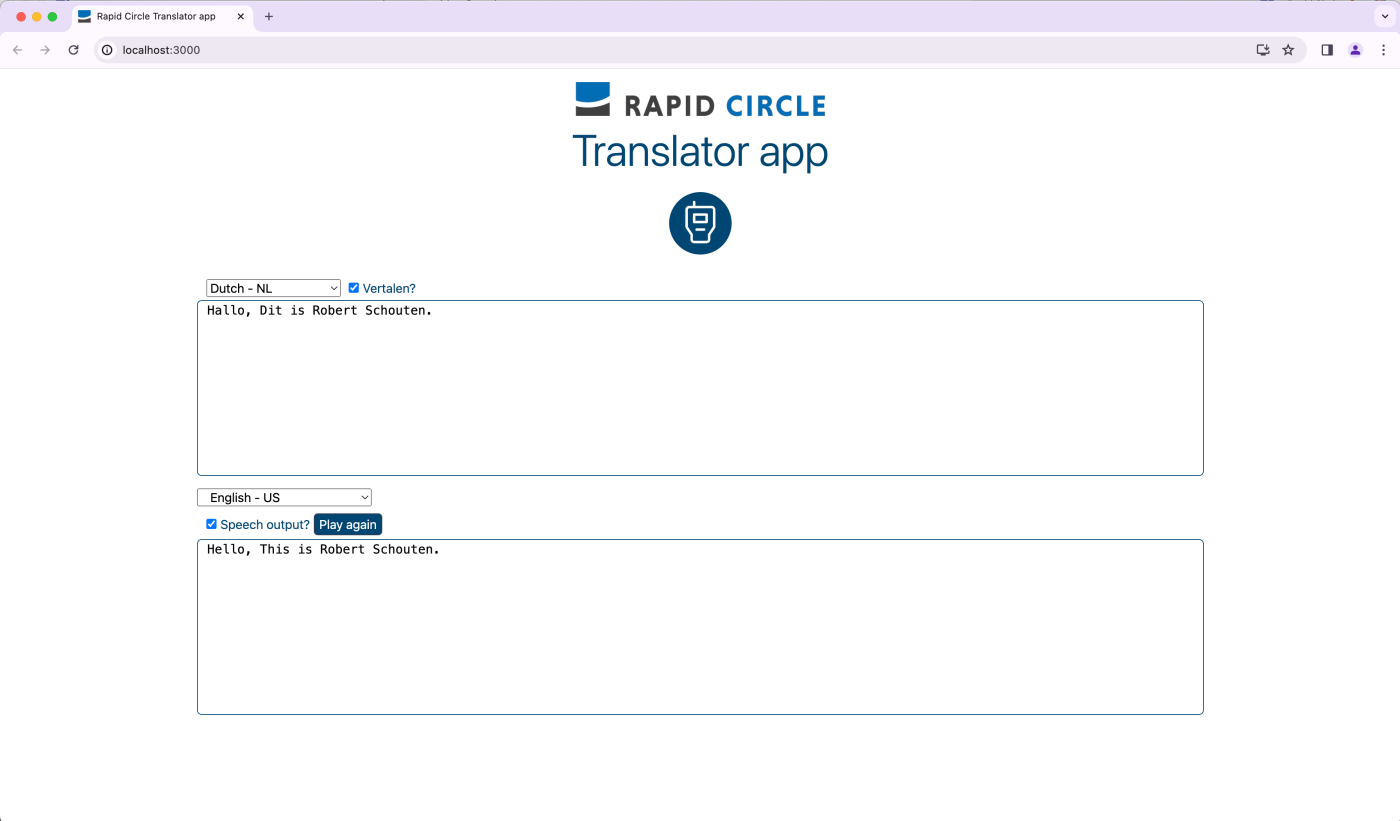

Using the above methods in a React web app could look something like this:

Conclusion

Setting up and using the Azure AI Speech service is a breeze. With the Azure AI Speech SDK, available in your preferred programming language, you can quickly add speech and translation capabilities (in over 30 languages) to your existing or new app. The Azure AI Speech SDK takes care of the technical details, such as accessing your microphone and speakers, recognizing your voice, and converting it to text or translating it into another language. Give it a try and let me know about the amazing things you’ve created with it.

Happy coding!
